Why I Built Claude Buddy: Bringing AI-Driven Development to Regulated Banking

In banking, every line of code is a liability.

That’s not hyperbole—it’s the reality I’ve navigated for over fifteen years as an enterprise architect in financial services. Every API endpoint, every database migration, every deployment carries regulatory weight. Auditors want to know who wrote it, why, and whether it was reviewed. The stakes are high: a bug in production isn’t just a bad user experience—it’s potential regulatory action, reputational damage, or worse.

So when AI coding assistants started making waves, I watched from a distance. The promise was intoxicating: 10x productivity, code written in minutes instead of hours. But I had questions that the demos never answered. How do you audit AI-generated code? How do you maintain governance when an AI is making architectural decisions? How do you explain to a regulator that your critical systems were “vibe coded” by a language model?

These weren’t theoretical concerns. They were blockers.

The Gap Nobody Was Addressing

As Head of Enterprise Architecture at a major Florida bank, I lead teams across development, integration, and infrastructure. We’re responsible for systems that process billions of dollars. The margin for error is zero.

When I evaluated AI coding tools in late 2024 and early 2025, I found a consistent pattern: they were built for startups. Fast iteration, minimal oversight, “move fast and break things.” That philosophy is antithetical to regulated finance. We can’t move fast. We can’t break things. We have to document everything.

The tools assumed a greenfield environment where the developer has full autonomy. Our environment couldn’t be more different:

- Compliance requirements: Every change needs an audit trail

- Risk-averse culture: Innovation happens within guardrails, not despite them

- Security concerns: AI tools accessing production codebases raise red flags with our security team

- Existing governance: We have established patterns, review processes, and documentation standards

The AI coding revolution was passing us by—not because the technology wasn’t ready, but because the tooling wasn’t built for us.

Discovering Claude Code

I’ll admit my initial skepticism when Anthropic launched Claude Code. Another AI coding assistant? I’d seen the pattern before: impressive demos, disappointing reality in enterprise contexts.

What changed my perspective was the architecture. Unlike autocomplete tools that suggest the next line, Claude Code operates as an autonomous agent. It can read and navigate an entire codebase, and it can execute multi-step tasks. More importantly, it maintains context across complex projects, which is exactly what enterprise development requires.

But the real breakthrough was recognizing what Claude Code could be with the right scaffolding. The raw capability was there. What was missing was the enterprise wrapper: the governance layer, the persona system for different expertise areas, the structured workflows that regulated industries demand.

That’s when I stopped evaluating and started building.

The Birth of Claude Buddy

Claude Buddy started as a personal experiment. I wanted to see if I could create a layer on top of Claude Code that enforced our enterprise patterns without sacrificing the productivity gains.

The core insight was simple: AI-driven development in regulated environments isn’t about unrestricted autonomy—it’s about guided autonomy. The AI should be incredibly capable, but it should operate within boundaries that satisfy compliance, security, and governance requirements.

Here’s what Claude Buddy provides:

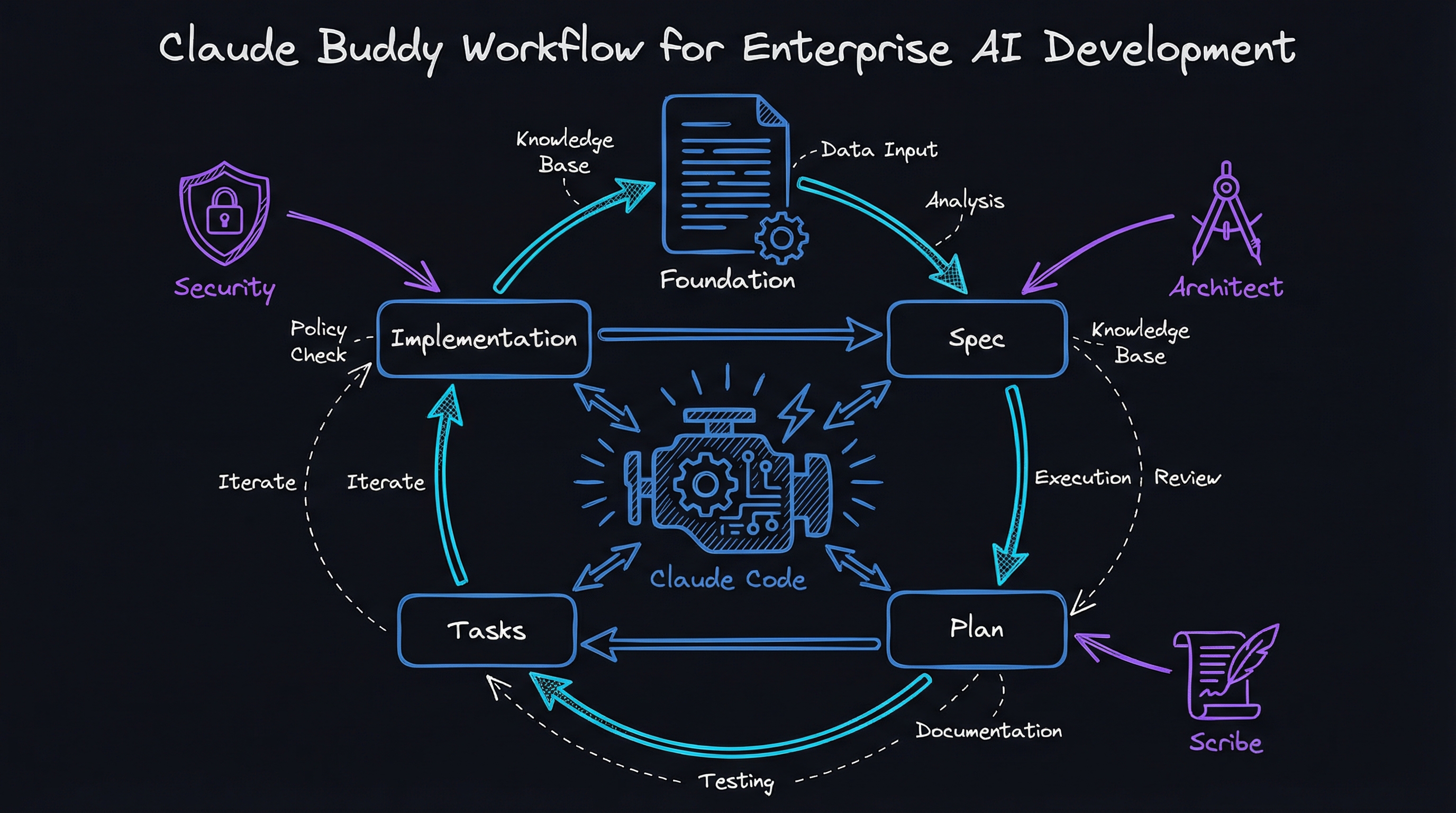

Structured Workflows: Instead of free-form “vibe coding,” Claude Buddy enforces a specification → plan → tasks → implementation flow. Every feature goes through documented stages. Every decision has a paper trail.
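
To make the staged flow concrete, here is a minimal Python sketch of a gate that refuses to skip stages and records who completed each one. Only the four stage names come from the description above. The `Feature` class, its fields, and the example feature name are hypothetical illustrations, not Claude Buddy's actual implementation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Stage order taken from the text; everything else is a hypothetical illustration.
STAGES = ("specification", "plan", "tasks", "implementation")


@dataclass
class Feature:
    name: str
    completed: list = field(default_factory=list)  # stages finished so far, in order
    audit_log: list = field(default_factory=list)  # one record per completed stage

    def next_stage(self):
        """Return the stage that must be completed next, or None when all are done."""
        if len(self.completed) == len(STAGES):
            return None
        return STAGES[len(self.completed)]

    def complete(self, stage, author, artifact):
        """Record a stage as done, refusing any attempt to skip ahead."""
        expected = self.next_stage()
        if stage != expected:
            raise ValueError(f"cannot complete {stage!r}; expected {expected!r}")
        self.completed.append(stage)
        self.audit_log.append({
            "stage": stage,
            "author": author,
            "artifact": artifact,
            "at": datetime.now(timezone.utc).isoformat(),
        })


feature = Feature("wire-transfer-limits")
feature.complete("specification", "alice", "spec.md")
feature.complete("plan", "alice", "plan.md")
try:
    # Jumping straight to implementation is rejected because "tasks" is still pending.
    feature.complete("implementation", "bob", "src/")
except ValueError as err:
    print(err)  # cannot complete 'implementation'; expected 'tasks'
```

The point of the design is that the audit log is a side effect of doing the work in order, so the paper trail cannot drift from what actually happened.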

Persona System: Different phases of development require different expertise. The security persona catches vulnerabilities. The architect persona ensures patterns are followed. The scribe persona maintains documentation standards. These aren’t just prompts—they’re specialized skill sets that activate based on context.
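
One simple way to picture context-based activation is keyword matching against the task description. The sketch below is an assumption made for illustration: the persona names come from the text, but the trigger words and the `select_personas` function are hypothetical, and a real system could use a very different selection mechanism.

```python
# Persona names come from the text; the trigger keywords are hypothetical.
PERSONA_TRIGGERS = {
    "security": {"auth", "token", "password", "encryption"},
    "architect": {"schema", "migration", "service", "interface"},
    "scribe": {"readme", "docs", "changelog"},
}


def select_personas(task_description):
    """Return the personas whose trigger words appear in the task description."""
    words = set(task_description.lower().split())
    return sorted(
        name for name, triggers in PERSONA_TRIGGERS.items() if words & triggers
    )


print(select_personas("Add token refresh to the auth service"))
# ['architect', 'security']
```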

Foundation Documents: Every project starts with a foundation that defines principles, patterns, and constraints. The AI operates within these boundaries, not around them.
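
As a rough illustration of operating within boundaries, a foundation can be modeled as data that every proposed change is checked against. The fields below (`approved_dependencies`, `max_function_lines`) and the shape of the `change` dictionary are hypothetical examples of constraints, not the format Claude Buddy uses.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Foundation:
    # Hypothetical fields chosen for illustration only.
    principles: tuple
    approved_dependencies: frozenset
    max_function_lines: int


def violations(foundation, change):
    """List every way a proposed change breaks the foundation's constraints."""
    found = []
    for dep in change["new_dependencies"]:
        if dep not in foundation.approved_dependencies:
            found.append(f"unapproved dependency: {dep}")
    if change["longest_function_lines"] > foundation.max_function_lines:
        found.append(f"function exceeds {foundation.max_function_lines}-line limit")
    return found


foundation = Foundation(
    principles=("every change is reviewed",),
    approved_dependencies=frozenset({"requests", "sqlalchemy"}),
    max_function_lines=50,
)
change = {"new_dependencies": ["requests", "leftpad"], "longest_function_lines": 80}
print(violations(foundation, change))
# ['unapproved dependency: leftpad', 'function exceeds 50-line limit']
```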

Git Integration: Commits are analyzed, messages are generated following conventions, and the entire workflow integrates with existing version control practices.
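
Checking a commit message against a convention can be as small as one regular expression. The convention assumed here, a `type(scope): summary` subject line with a fixed list of types, is a common style picked for illustration. The text does not say which convention Claude Buddy follows, and `check_commit_message` is a hypothetical helper.

```python
import re

# Assumed convention: "type(scope): summary", scope optional, summary up to 72 chars.
COMMIT_PATTERN = re.compile(
    r"^(feat|fix|docs|test|refactor|chore)(\([a-z0-9-]+\))?: \S.{0,71}$"
)


def check_commit_message(message):
    """Return a list of problems with the subject line; empty means it passes."""
    subject = message.splitlines()[0] if message else ""
    problems = []
    if not COMMIT_PATTERN.match(subject):
        problems.append("subject must look like 'type(scope): summary'")
    if subject.endswith("."):
        problems.append("subject must not end with a period")
    return problems


print(check_commit_message("feat(payments): add daily transfer limit"))  # []
print(check_commit_message("updated stuff"))
# ["subject must look like 'type(scope): summary'"]
```

A check like this fits naturally in a `commit-msg` hook, so the convention is enforced by version control itself rather than by reviewer memory.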

I open-sourced it because the problem isn’t unique to my organization. Every enterprise architect in a regulated industry faces the same tension: how do you capture AI productivity gains without sacrificing the governance that keeps you compliant?

What Actually Works

After months of using Claude Buddy in production contexts, here’s what I’ve learned about AI adoption in regulated environments:

Governance-first approach wins. Don’t try to retrofit compliance onto AI workflows—build it in from the start. When the AI understands the constraints upfront, it makes better decisions.

Gradual adoption beats big bang. We started with documentation generation and test writing—low-risk, high-value use cases. As confidence grew, we expanded to more complex development tasks.

Audit trails aren’t overhead. The documentation that Claude Buddy generates isn’t just for regulators; it’s institutional knowledge. When someone asks “why was this decision made?” six months later, the answer is in the specification, the plan, and the commit history.

The AI is a team member, not a replacement. The best results come from treating AI assistance as you would a junior developer: capable, fast, but requiring review and guidance.

What doesn’t work is pretending regulated industries can adopt AI the same way startups do. The “move fast” mentality creates technical debt that compounds into compliance risk. Every shortcut taken is a future audit finding waiting to happen.

Looking Forward

We’re at an inflection point. The enterprises that figure out AI-driven development within regulatory constraints will have a significant competitive advantage. Those that wait for perfect tooling will fall behind.

The technology is ready. The productivity gains are real. What’s needed is the organizational and tooling layer that makes AI safe for enterprise adoption.

That’s why I built Claude Buddy, and that’s why I’m sharing it. This isn’t just about my bank or my team—it’s about demonstrating that regulated industries can participate in the AI revolution without compromising the governance that protects customers and institutions.

If you’re an enterprise architect struggling with the same challenges, I’d love to hear from you. Check out Claude Buddy on GitHub, follow me on X or LinkedIn, and stay tuned for more posts on AI-driven development in regulated environments.

The future of enterprise development is AI-assisted. The question isn’t whether to adopt—it’s how to adopt responsibly.