What PAI's 7-Phase Loop Taught Me About Claude Buddy's Missing Piece

I’ve been running two AI systems in parallel.

On one side, there’s Daniel Miessler’s Personal AI Infrastructure—a unified cognitive operating system he calls Kai. It’s built for personal productivity: 65+ skills, voice integration, automatic learning capture, and a philosophy that “System > Intelligence.”

On the other side, there’s Claude Buddy, the enterprise governance layer I built for Claude Code. It’s designed for regulated environments: structured workflows, persona-based expertise, audit trails, and compliance-first design.

For months, I treated them as separate concerns. Personal projects got PAI’s full autonomy. Work projects got Claude Buddy’s guardrails. But recently, I started asking a different question: what could they learn from each other?

The answer revealed a gap I hadn’t noticed in my own tooling.

PAI’s Scientific Method

At the heart of PAI is a 7-phase loop that Daniel calls “the scientific method for AI work”:

- OBSERVE — Gather information, understand the current state

- THINK — Analyze, reason about what you’ve observed

- PLAN — Design the approach, consider alternatives

- BUILD — Create the implementation

- EXECUTE — Run it, deploy it, make it real

- VERIFY — Test, validate, confirm it works

- LEARN — Capture insights, update knowledge, improve for next time

This loop operates at every scale: individual tasks, projects, even career decisions. The critical insight Daniel emphasizes: “verifiability is everything. If you can’t tell whether you succeeded, you can’t improve.”
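Here is a minimal Python sketch of the loop that makes the verifiability point concrete. It assumes each action phase is a function that transforms a shared state dict, and that VERIFY is an explicit pass/fail predicate. The names (`run_loop`, `ACTION_PHASES`, the state keys) are hypothetical and not taken from PAI itself. One design choice matters here: LEARN runs on every pass, so a failed verification still leaves a record.

```python
from enum import Enum
from typing import Callable


class Phase(Enum):
    OBSERVE = "observe"
    THINK = "think"
    PLAN = "plan"
    BUILD = "build"
    EXECUTE = "execute"
    VERIFY = "verify"
    LEARN = "learn"


# Phases that do work; VERIFY and LEARN are handled explicitly by the loop below.
ACTION_PHASES = (Phase.OBSERVE, Phase.THINK, Phase.PLAN, Phase.BUILD, Phase.EXECUTE)


def run_loop(
    handlers: dict[Phase, Callable[[dict], dict]],
    verify: Callable[[dict], bool],
    max_iterations: int = 3,
) -> dict:
    """Repeat the loop until VERIFY passes or the iteration budget runs out."""
    state: dict = {"learnings": []}
    for iteration in range(1, max_iterations + 1):
        for phase in ACTION_PHASES:
            state = handlers[phase](state)
        # VERIFY: an explicit yes/no answer; without it there is nothing to learn from.
        passed = verify(state)
        # LEARN: record the outcome on every pass, including failures.
        state["learnings"].append({"iteration": iteration, "passed": passed})
        if passed:
            state["verified"] = True
            return state
    state["verified"] = False
    return state


# Toy usage: BUILD succeeds on the second attempt.
def build(state: dict) -> dict:
    state["attempts"] = state.get("attempts", 0) + 1
    return state


handlers = {phase: (lambda state: state) for phase in ACTION_PHASES}
handlers[Phase.BUILD] = build
result = run_loop(handlers, verify=lambda s: s["attempts"] >= 2)
print(result["verified"], result["learnings"])
# True [{'iteration': 1, 'passed': False}, {'iteration': 2, 'passed': True}]
```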

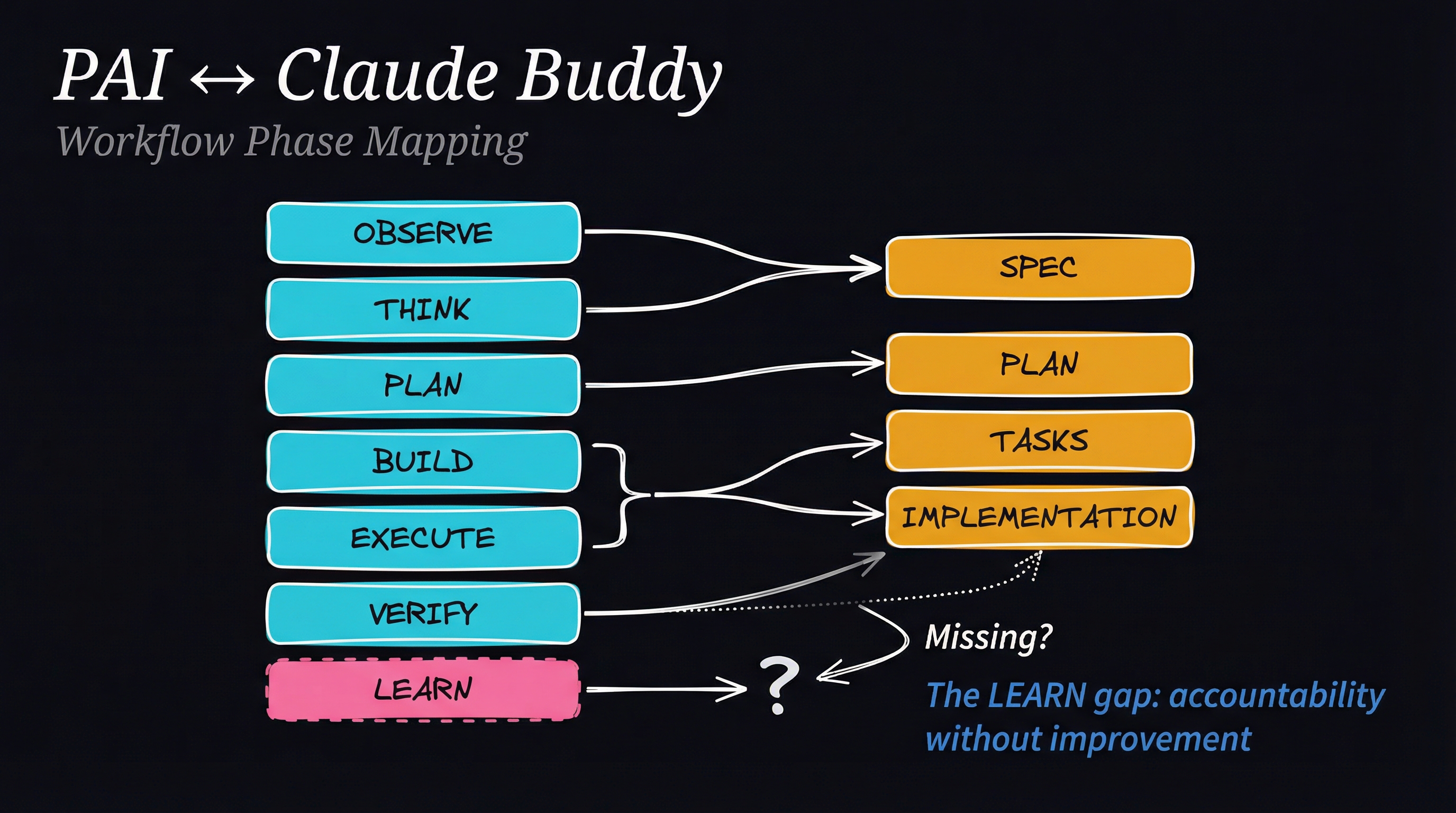

When I mapped this against Claude Buddy’s workflow, something interesting emerged.

The Mapping

Claude Buddy enforces a structured workflow for enterprise development:

- Specification — Document what you’re building and why

- Plan — Design the implementation approach

- Tasks — Break down into executable units

- Implementation — Build with persona-guided expertise

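Here’s how the two systems align:

- OBSERVE, THINK → Specification

- PLAN → Plan

- BUILD, EXECUTE → Tasks, Implementation

- VERIFY → QA persona (active during Implementation)

- LEARN → ?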

The mapping is surprisingly clean. PAI’s OBSERVE and THINK phases correspond to Claude Buddy’s specification work—gathering requirements, understanding constraints, analyzing the problem space. PLAN maps directly. BUILD and EXECUTE map to the Tasks and Implementation phases.

VERIFY exists in Claude Buddy through the QA persona, which activates during implementation to catch issues before they reach production.
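If you write the same mapping down as data, finding the gap becomes mechanical. This is an illustrative Python sketch. The phase names come from the two workflows described earlier, and the helper names (`find_gaps`, `group_by_target`) are hypothetical.

```python
PAI_PHASES = ["OBSERVE", "THINK", "PLAN", "BUILD", "EXECUTE", "VERIFY", "LEARN"]

# Where each PAI phase lands in Claude Buddy's workflow, per the mapping described above.
PAI_TO_BUDDY = {
    "OBSERVE": "Specification",
    "THINK": "Specification",
    "PLAN": "Plan",
    "BUILD": "Tasks / Implementation",
    "EXECUTE": "Tasks / Implementation",
    "VERIFY": "Implementation (QA persona)",
}


def find_gaps(phases: list[str], mapping: dict[str, str]) -> list[str]:
    """Return the phases that have no counterpart in the target workflow."""
    return [phase for phase in phases if phase not in mapping]


def group_by_target(mapping: dict[str, str]) -> dict[str, list[str]]:
    """Invert the mapping: which PAI phases feed each Claude Buddy phase."""
    grouped: dict[str, list[str]] = {}
    for source, target in mapping.items():
        grouped.setdefault(target, []).append(source)
    return grouped


print(find_gaps(PAI_PHASES, PAI_TO_BUDDY))  # ['LEARN']
```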

But then I hit the gap.

The Missing Piece: LEARN

PAI has a dedicated system called UOCS—Universal Output Capture System—that automatically documents session transcripts, learnings discovered, research findings, and decisions made. Every interaction potentially improves future interactions.

Claude Buddy captures audit trails. It documents what was done and why. But it doesn’t systematically capture what was learned in a way that improves future work.

In enterprise environments, we’re meticulous about documenting decisions for compliance. But we’re terrible at documenting insights for improvement. The audit trail tells regulators what happened. It doesn’t tell the next developer what patterns emerged, what approaches failed, or what tribal knowledge was gained.

This is the gap: Claude Buddy is optimized for accountability, not learning.

Why This Matters for Regulated Industries

In banking, we have extensive post-mortems after incidents. We document root causes. We create action items. But that knowledge often dies in a Confluence page that nobody reads.

PAI’s approach is different. Learning isn’t a separate activity; it’s embedded in the workflow. Every session can update the system’s understanding. Skills evolve based on what works. In effect, the AI gets smarter about your domain over time: the underlying model doesn’t change, but the context and skills it works from do.

Imagine if Claude Buddy captured not just “this commit implemented feature X” but also:

- “The initial approach using Strategy A failed due to constraint Y”

- “Pattern Z from the existing codebase proved more maintainable”

- “The security persona flagged issue W, which informed the final design”

That’s institutional knowledge that compounds. The next developer working on a similar feature doesn’t start from zero—they start from learned experience.
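One way to capture that knowledge is a structured record that sits next to the commit instead of replacing it. Below is a minimal Python sketch. It assumes a hypothetical `LearningRecord` schema that holds the three kinds of insight listed above, plus free-form tags for finding similar work later. None of this is an existing Claude Buddy API.

```python
from dataclasses import dataclass, field


@dataclass
class LearningRecord:
    commit: str  # links the learning to the existing audit trail
    summary: str  # what the audit trail already says
    tags: set[str] = field(default_factory=set)
    failed_approaches: list[str] = field(default_factory=list)
    effective_patterns: list[str] = field(default_factory=list)
    persona_findings: list[str] = field(default_factory=list)


def relevant_learnings(records: list[LearningRecord], tags: set[str]) -> list[LearningRecord]:
    """Records sharing at least one tag with the new work, most overlap first."""
    scored = [(len(record.tags & tags), record) for record in records]
    scored = [pair for pair in scored if pair[0] > 0]
    # sorted() is stable even with reverse=True, so ties keep their original order.
    return [record for _, record in sorted(scored, key=lambda pair: pair[0], reverse=True)]


history = [
    LearningRecord(
        commit="a1b2c3d",
        summary="Implemented feature X",
        tags={"payments", "validation"},
        failed_approaches=["Strategy A failed due to constraint Y"],
        effective_patterns=["Pattern Z from the existing codebase proved more maintainable"],
        persona_findings=["Security persona flagged issue W, which informed the final design"],
    ),
    LearningRecord(commit="e4f5a6b", summary="Reporting export", tags={"reporting"}),
]

for record in relevant_learnings(history, {"payments"}):
    print(record.commit, record.failed_approaches)
```

The commit hash is what keeps this compatible with the audit trail: the learning adds to the compliance record rather than forming a parallel history.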

Integration Ideas

I’m not proposing a wholesale merge of PAI and Claude Buddy. They serve different masters: PAI serves the individual, Claude Buddy serves the organization. But the learning mechanism could bridge both.

Idea 1: Learning Hooks

Claude Buddy’s foundation documents already define project principles and constraints. What if they also defined learning categories? At session end, a hook could prompt: “What patterns or insights from this session should be captured?”
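Here is a Python sketch of that hook. It assumes the foundation document is Markdown with a hypothetical `## Learning Categories` section. The sketch only builds the prompt. Wiring it into Claude Code’s hook system (for example on the `Stop` event) is not shown, and how Claude Buddy would do that is itself an assumption.

```python
FOUNDATION_EXAMPLE = """\
# Project Foundation
## Principles
- All changes require a security review
## Learning Categories
- Patterns that proved maintainable
- Approaches that failed, and why
- Persona findings that changed the design
## Constraints
- No customer data in logs
"""

QUESTION = "What patterns or insights from this session should be captured?"


def parse_learning_categories(foundation: str, heading: str = "## Learning Categories") -> list[str]:
    """Collect the bullet items under `heading`, stopping at the next heading."""
    categories = []
    in_section = False
    for line in foundation.splitlines():
        stripped = line.strip()
        if stripped.startswith("#"):
            # Any heading either enters or leaves the learning section.
            in_section = stripped == heading
            continue
        if in_section and stripped.startswith("- "):
            categories.append(stripped[2:].strip())
    return categories


def session_end_prompt(categories: list[str]) -> str:
    """The session-end question, followed by the project's own categories."""
    lines = [QUESTION]
    lines += [f"{i}. {category}" for i, category in enumerate(categories, start=1)]
    return "\n".join(lines)


print(session_end_prompt(parse_learning_categories(FOUNDATION_EXAMPLE)))
```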

Idea 2: Persona Learning

Each Claude Buddy persona could maintain its own learnings. The security persona accumulates domain-specific knowledge about vulnerabilities encountered. The architect persona learns which patterns work in your codebase. Over time, the personas become genuinely specialized to your organization.
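Persona learning could start as something as plain as an append-only file per persona. The sketch below is hypothetical: `PersonaMemory` and the JSON Lines layout are illustrative and not part of Claude Buddy. `record` appends a learning along with its source session. `context_for` returns the most recent entries, formatted for inclusion in that persona’s prompt.

```python
import json
import re
from pathlib import Path


class PersonaMemory:
    """Hypothetical per-persona learning store: one append-only JSON Lines file per persona."""

    def __init__(self, root: Path) -> None:
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)

    def _path(self, persona: str) -> Path:
        # Restrict names so a persona can't escape the storage directory.
        if not re.fullmatch(r"[a-z0-9-]+", persona):
            raise ValueError(f"invalid persona name: {persona!r}")
        return self.root / f"{persona}.jsonl"

    def record(self, persona: str, learning: str, source: str) -> None:
        """Append one learning, tagged with the session or commit it came from."""
        entry = {"learning": learning, "source": source}
        with self._path(persona).open("a", encoding="utf-8") as fh:
            fh.write(json.dumps(entry) + "\n")

    def recall(self, persona: str, limit: int = 5) -> list[str]:
        """Return the most recent learnings for a persona, oldest first."""
        path = self._path(persona)
        if not path.exists():
            return []
        with path.open(encoding="utf-8") as fh:
            entries = [json.loads(line) for line in fh if line.strip()]
        return [entry["learning"] for entry in entries[-limit:]]

    def context_for(self, persona: str, limit: int = 5) -> str:
        """Format recent learnings as bullet lines for the persona's prompt."""
        return "\n".join(f"- {item}" for item in self.recall(persona, limit))
```

An append-only file is deliberately boring. Nothing is ever rewritten in place, so a learning’s history stays reviewable, which matters more in a regulated setting than clever retrieval.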

Idea 3: Compliant UOCS

PAI’s UOCS captures everything. In regulated environments, that’s problematic—you can’t just log all interactions when they might contain sensitive data. But a filtered, compliance-aware capture system could log insights without logging content.
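Here is a minimal sketch of compliance-aware capture in Python. It stores only a redacted insight, a category, and a salted hash of the session ID. It never stores the transcript. The regex patterns are illustrative assumptions, not a complete data-classification policy, and a regulated deployment would plug in its own rules. `capture_insight` and `InsightEntry` are hypothetical names.

```python
import hashlib
import re
from dataclasses import dataclass

# Illustrative patterns only; real rules would come from the organization's data classification.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "IBAN": re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b"),
    # 13 to 16 digits, with optional single spaces or dashes between digits.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


@dataclass(frozen=True)
class InsightEntry:
    session_ref: str  # salted hash: links to the audit trail without storing the raw session ID
    category: str
    insight: str  # redacted text only; the transcript itself is never captured


def redact(text: str) -> str:
    """Replace each sensitive match with a bracketed label, in pattern order."""
    for label, pattern in REDACTION_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def capture_insight(session_id: str, category: str, insight: str, salt: str) -> InsightEntry:
    digest = hashlib.sha256((salt + session_id).encode("utf-8")).hexdigest()[:16]
    return InsightEntry(session_ref=digest, category=category, insight=redact(insight))


entry = capture_insight(
    "sess-42",
    "failed_approach",
    "Strategy A failed for jane.doe@bank.example on card 4111 1111 1111 1111",
    salt="per-environment-secret",
)
print(entry.insight)  # Strategy A failed for [EMAIL] on card [CARD]
```

The salted hash is pseudonymization, not anonymization. Anyone holding the salt can link an insight back to its session, and that link is what lets the learning log and the audit trail point at each other.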

The Bigger Picture

Daniel Miessler’s core philosophy—“System > Intelligence”—resonates deeply with what I’ve learned building enterprise AI tooling. A well-designed system with average models outperforms brilliant models with poor architecture.

Both PAI and Claude Buddy are systems for making AI more useful. PAI does it through personalization and learning. Claude Buddy does it through governance and structure. The future might be both: AI systems that are deeply personalized AND rigorously governed, that learn continuously AND maintain audit trails.

For now, I’m experimenting with adding learning hooks to Claude Buddy. The goal isn’t to replicate PAI—it’s to borrow the best idea and adapt it for enterprise constraints.

Because in regulated industries, the question isn’t whether AI will transform development. It’s whether we’ll build systems that actually get smarter over time, or just systems that document their mistakes really well.

If you’re exploring the intersection of personal AI infrastructure and enterprise tooling, I’d love to hear your approach. Find me on X or LinkedIn.